Artificial intelligence (AI) is ubiquitous. In this digitized world, AI significantly impacts every field, from agriculture to self-driving cars to your Netflix suggestions. For AI to work, high-quality data is fundamental.

According to a McKinsey report, 44% of firms that used AI technologies saw lower business expenses and higher revenue. If deploying AI has such tremendous benefits, why isn’t everyone doing it? One answer: only 3% of companies meet basic data quality standards. So the one thing holding organizations back from adopting AI in their business is the lack of high-quality data.

Before looking at how businesses can achieve high-quality data, let’s examine the role data quality plays in AI models.

Role of data quality in AI

Suppose you have started developing an AI model and have been given a massive dataset. As soon as you begin, you notice several data quality issues. You fix them and re-run the query to build a more accurate model. But what if the same issues keep recurring? With a data quality report at the start of the project, you could have identified and addressed these problems up front instead of going back and forth over poor-quality data.

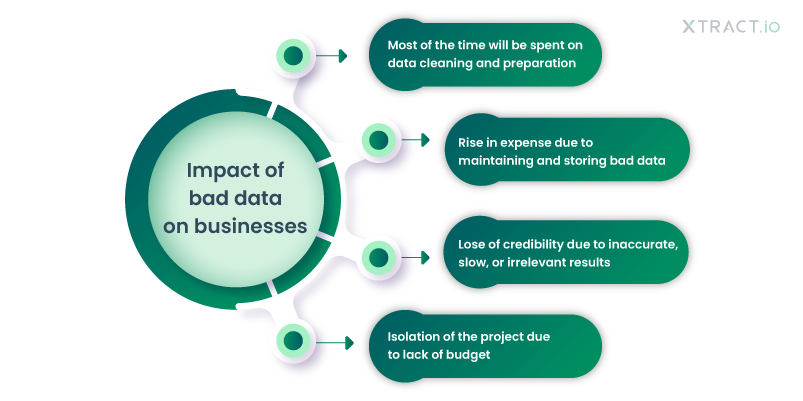

According to an IBM executive, poor-quality data is one of the primary reasons AI projects are halted or canceled. Organizations eventually lose patience, along with the funding needed to achieve the desired result. Without proper data analysis, that outcome is out of reach.

AI models are no longer limited to computer games. AI now plays a major role in every industry, including healthcare, where it aids decisions such as diagnosing disease and determining who needs surgery, and even helps perform surgery. In that setting, a single data flaw might cost a life. Organizations are investing in cutting-edge algorithms to power these AI models, but the first step is to provide the models with high-quality data.

What happens if you feed AI models poor-quality data?

Have you ever noticed how accurate Spotify’s recommendations are? They seem to understand your mood and suggest music to match it. Spotify achieves this high level of personalization using the centralized data it collects from the moment you sign up. If a minor glitch in that data caused the recommendations to break down, you would not feel the same way about Spotify, and its brand image would suffer. High-quality data is a prerequisite for AI models.

According to a TechRepublic survey, 59% of participating enterprises miscalculated demand because of invalid data, and 26% of respondents targeted the wrong prospects. The problem with incorrect data is that it trains AI models on false information, which makes it difficult for them to deliver accurate results.

A major issue arises when organizations identify bad data: instead of fixing it, they feed in more data in the hope that volume will solve the problem. A small amount of high-quality data is more valuable than a large dataset. Poor-quality data forces teams to use more complex AI models just to get disorganized datasets to work together and produce accurate results.

How do you ensure high data quality for AI models?

Checking data quality manually is an option. However, when dealing with gigabytes or petabytes of data, you need an intuitive, automated data quality tool to do the job.

Here are a few steps to ensure high-quality data.

Know your data source

To ensure data quality throughout the process, organizations should identify, understand, and analyze their data sources and document all information about them. Details such as where the data came from, what type of data it is, when it was last updated, and who is responsible for it help data scientists analyze the dataset precisely before feeding it to AI models.
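As a rough illustration, this documentation step could start as a small metadata record per source. The Python sketch below assumes a hypothetical `DataSourceRecord` type and `stale_sources` helper (neither comes from any particular tool). It captures the fields mentioned above and flags sources that have not been refreshed recently; the 30-day threshold is an arbitrary choice for illustration.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class DataSourceRecord:
    """Hypothetical metadata record documenting one data source."""
    name: str            # short identifier for the source
    origin: str          # where the data came from (system, vendor, feed)
    data_type: str       # e.g. "tabular", "text", "images"
    last_updated: date   # when the source was last refreshed
    owner: str           # who is responsible for the source


def stale_sources(catalog, today, max_age_days=30):
    """Return the names of sources not updated within max_age_days of today."""
    cutoff = today - timedelta(days=max_age_days)
    return [record.name for record in catalog if record.last_updated < cutoff]


# Illustrative catalog; names, origins, owners, and dates are made up.
catalog = [
    DataSourceRecord("crm_contacts", "internal CRM export", "tabular",
                     date(2024, 1, 5), "sales-ops"),
    DataSourceRecord("web_reviews", "third-party review feed", "text",
                     date(2024, 3, 1), "data-eng"),
]

print(stale_sources(catalog, today=date(2024, 3, 10)))
```

Keeping these records in one place gives data scientists a quick answer to "where did this come from, and is it current?" before any modeling starts.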

Profile your datasets

The next step in ensuring that your AI models produce the desired results is to profile your data. Data profiling analyzes the data fed into your systems to learn more about it, looking for inconsistencies, missing values, and outliers. This lets you determine whether the dataset is usable and helps ensure that you are working with high-quality data.
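To make profiling more concrete, here is a minimal sketch using only Python’s standard library. It assumes records arrive as a list of dictionaries and uses hypothetical helper names (`profile`, `missing_counts`, `iqr_outliers`, `inconsistent_spellings`). Missing values are counted as `None` or empty strings, outliers are flagged with the common rule of 1.5 times the interquartile range (IQR), and inconsistencies are detected as values that differ only in letter case or surrounding whitespace. These are illustrative heuristics, not the method of any specific product.

```python
import statistics
from collections import defaultdict


def missing_counts(rows):
    """Count None or empty-string values per column across all rows."""
    columns = sorted({key for row in rows for key in row})
    return {col: sum(1 for row in rows if row.get(col) in (None, ""))
            for col in columns}


def iqr_outliers(values):
    """Flag values outside 1.5 * IQR beyond the first and third quartiles."""
    if len(values) < 4:
        return []  # too few points for meaningful quartiles
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]


def inconsistent_spellings(values):
    """Group strings that differ only in case/whitespace; keep groups with >1 spelling."""
    groups = defaultdict(set)
    for v in values:
        if isinstance(v, str) and v.strip():
            groups[v.strip().lower()].add(v)
    return {key: sorted(spellings)
            for key, spellings in groups.items() if len(spellings) > 1}


def profile(rows, numeric_cols, categorical_cols):
    """Build a simple profile: missing values, numeric outliers, spelling inconsistencies."""
    report = {"missing": missing_counts(rows), "outliers": {}, "inconsistent": {}}
    for col in numeric_cols:
        values = [r[col] for r in rows
                  if isinstance(r.get(col), (int, float))
                  and not isinstance(r.get(col), bool)]
        report["outliers"][col] = iqr_outliers(values)
    for col in categorical_cols:
        report["inconsistent"][col] = inconsistent_spellings(r.get(col) for r in rows)
    return report


# Illustrative records with one missing age, one blank country,
# one suspicious age, and "US" vs "us" spelled two ways.
rows = [
    {"age": 34, "country": "US"},
    {"age": 29, "country": "us"},
    {"age": 41, "country": "DE"},
    {"age": None, "country": "DE"},
    {"age": 38, "country": "FR"},
    {"age": 31, "country": ""},
    {"age": 420, "country": "FR"},
]

print(profile(rows, numeric_cols=["age"], categorical_cols=["country"]))
```

In practice a profiling tool would cover many more checks (types, formats, distributions), but even this small report shows at a glance whether a dataset needs cleaning before it reaches a model.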

Perform data quality checks

Using a robust data quality tool and a central library of pre-built rules, organizations should be able to quickly validate any database against domain-based rules and ensure high data accuracy. The tool should be able to flag problems in the original incoming data and, where possible, enrich it. This approach also lets organizations measure and analyze data quality over time and ensure that the data fed into AI models is of high quality.
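A central rule library can be sketched as a mapping from data domains to named validation rules. The Python example below is a hypothetical illustration, not Freda’s or any other product’s API: `RULE_LIBRARY`, `check_batch`, and the rules themselves (a simple email pattern and an age range of 0 to 120) are assumptions. It flags each failing (row, column, rule) combination and returns the share of rows that pass every check.

```python
import re
from typing import Callable, Dict

# Hypothetical central library of pre-built rules, keyed by data domain.
# Each rule is a predicate that returns True when a value passes.
RULE_LIBRARY: Dict[str, Dict[str, Callable[[object], bool]]] = {
    "email": {
        "is_valid_email": lambda v: isinstance(v, str)
        and re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    },
    "age": {
        "is_integer": lambda v: isinstance(v, int) and not isinstance(v, bool),
        "in_range_0_120": lambda v: isinstance(v, int) and 0 <= v <= 120,
    },
}


def check_batch(rows, column_domains):
    """Apply the domain rules to each column; return failure flags and the row pass rate."""
    flags = []
    for i, row in enumerate(rows):
        for col, domain in column_domains.items():
            for rule_name, rule in RULE_LIBRARY[domain].items():
                if not rule(row.get(col)):
                    flags.append((i, col, rule_name))
    failed_rows = {i for i, _, _ in flags}
    pass_rate = 1 - len(failed_rows) / len(rows) if rows else 1.0
    return flags, pass_rate


# Illustrative batch: one malformed email and one out-of-range age.
batch = [
    {"email": "ana@example.com", "age": 34},
    {"email": "not-an-email", "age": 29},
    {"email": "li@example.org", "age": 150},
    {"email": "sam@example.net", "age": 41},
]

flags, pass_rate = check_batch(batch, {"email": "email", "age": "age"})
for row_index, column, rule_name in flags:
    print(f"row {row_index}: {column} failed {rule_name}")
print(f"pass rate: {pass_rate:.0%}")
```

Recording the pass rate for each incoming batch gives you a simple time series; a sudden drop is a signal to investigate the source before that data reaches your models.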

Ready to design accurate AI models?

Organizations are investing in AI projects to provide customized services, deliver real-time responses, increase profit, and reduce costs. To carve out a niche in this competitive market, you must train your AI models on high-quality data. Quality data is no longer optional!

If you want to invest in AI technologies, first analyze your data regularly and ensure its quality with an automated data quality platform. If you are unsure which data quality platform best meets your requirements, we’ve got you covered. At Xtract.io, we offer Freda, an intuitive data quality platform, to solve all your data challenges. Take a free demo with us today and steer your business in the right direction.