The advent of AI has ushered in an era where machine learning models, predictive analytics, and automation have become integral to the fabric of organizations across diverse sectors. AI applications catalyze unprecedented advancements in almost every industry in this digital era. They hold the potential to unravel complex patterns, predict future trends, and streamline operations with an efficiency previously unseen. Yet, amid the promise and potential of AI, the foundation upon which these intelligent systems stand is the very data they consume, analyze, and learn from. As organizations harness the power of AI to glean insights, automate processes, and make informed decisions, data quality becomes paramount.

The success or failure of AI initiatives is intricately tied to the quality of this foundational data. Data quality solutions play a significant role in mitigating biases and enhancing predictions to ensure ethical AI deployment. In this blog, let’s discover how data quality is the key to unlocking unprecedented possibilities in the era of AI.

Role of data quality in AI

Have you ever wondered why some artificial intelligence applications excel while others fall short of expectations? The performance of an AI application often hinges on a fundamental but frequently overlooked factor: the quality of the underlying data. Data quality shapes the accuracy, reliability, and trustworthiness of AI models, and it strongly influences whether an AI project succeeds or fails. Picture a medical AI model designed for predictive analytics in healthcare. The stakes are high, as the model's predictions can directly impact patient outcomes. If the data used to train this system contains inaccuracies or crucial omissions, the consequences could be severe: misguided predictions that might compromise the well-being of patients.

Data quality is not merely a technical detail but a strategic imperative for organizations venturing into AI. The accuracy of AI predictions is intrinsically tied to the integrity of the data used during the training phase. However, the significance of data quality extends beyond accuracy: it is vital for building trust in AI systems. As industries increasingly integrate AI into decision-making processes, the trustworthiness of these systems becomes paramount for their widespread acceptance and use. Organizations should therefore treat data quality as an integral part of their strategic initiatives. It goes hand in hand with mitigating risks, reducing biases, and establishing a robust foundation for adaptable and scalable AI solutions.

Critical data quality elements to enhance AI models

Data quality is the lifeblood of successful artificial intelligence applications. Here are the key components to consider when ensuring data quality in AI projects:

Accuracy

Accuracy is fundamental to quality data. It pertains to the correctness of the information within the dataset. Inaccurate data will result in flawed training and predictions. Ensuring data is free from errors, inconsistencies, and outdated information is paramount for building trustworthy AI models.

Completeness

Complete datasets contain all the information required for a specific task. Incomplete data, where certain fields or entries are missing, can hinder the learning process of AI models. A complete dataset gives the model what it needs to learn the patterns and relationships in the data.
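One simple way to put a number on completeness is to measure, for each field, the share of records that actually carry a value. The sketch below is only an illustration: the `completeness_by_field` helper, the sample `patients` records, and the choice to treat `None` and empty strings as missing are all assumptions, not part of any particular tool.

```python
from typing import Any


def completeness_by_field(
    records: list[dict[str, Any]], fields: list[str]
) -> dict[str, float]:
    """Return, per field, the fraction of records with a non-missing value."""
    total = len(records)
    if total == 0:
        return {field: 0.0 for field in fields}
    report = {}
    for field in fields:
        # Assumption: None and "" both count as missing.
        present = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = present / total
    return report


# Hypothetical sample data for illustration only.
patients = [
    {"id": 1, "age": 54, "blood_type": "A+"},
    {"id": 2, "age": None, "blood_type": "O-"},
    {"id": 3, "age": 61, "blood_type": ""},
    {"id": 4, "age": 47},
]
report = completeness_by_field(patients, ["id", "age", "blood_type"])
print(report)
```

A field that scores well below 1.0 is a candidate for backfilling, imputation, or exclusion before training.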

Consistency

Consistency ensures that the same data format is followed across different sources and over time. Inconsistent data can introduce confusion and errors into AI models. Standardizing formats, units, and conventions helps maintain consistency and data reusability across different AI projects in an organization.
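As a rough sketch of what standardizing formats and units can look like, the snippet below converts dates from a few source formats to ISO 8601 and weights to kilograms. The list of accepted date formats, the day-first reading of slash-separated dates, and the helper names `normalize_date` and `to_kilograms` are assumptions made for this example.

```python
from datetime import datetime

# Assumed source formats; slash-separated dates are read as day/month/year.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%Y%m%d")

# Conversion factors to kilograms.
KG_FACTORS = {"kg": 1.0, "g": 0.001, "lb": 0.45359237}


def normalize_date(raw: str) -> str:
    """Return the date as YYYY-MM-DD, trying each known format in turn."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {raw!r}")


def to_kilograms(value: float, unit: str) -> float:
    """Convert a weight to kilograms, rounded to three decimal places."""
    return round(value * KG_FACTORS[unit.lower()], 3)


print(normalize_date("05/03/2024"))  # day-first input
print(to_kilograms(10, "lb"))
```

Values that match none of the known formats raise an error instead of being guessed, so inconsistent records surface early rather than slipping into training data.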

Relevance

Quality data is relevant to the objectives of the AI model. Defining and maintaining the relevance of data to the specific task at hand is essential for optimizing AI performance, because it directly affects the model's ability to discern meaningful patterns.

Validity

Validity ensures the data accurately represents the real-world entities or phenomena it aims to capture. Rigorous validation processes help identify and rectify any discrepancies in the dataset.
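A validation process often boils down to a set of rules that every record must satisfy. Here is a minimal sketch, assuming a hypothetical patient record with `age`, `heart_rate`, and `sex` fields; the plausible ranges and allowed codes are illustrative choices, not clinical guidance.

```python
from typing import Any, Callable

Rule = Callable[[Any], bool]

# Hypothetical rules: each maps a field name to a plausibility check.
RULES: dict[str, Rule] = {
    "age": lambda v: isinstance(v, int) and 0 <= v <= 120,
    "heart_rate": lambda v: isinstance(v, (int, float)) and 20 <= v <= 250,
    "sex": lambda v: v in ("F", "M", "X"),
}


def validate(record: dict[str, Any]) -> list[str]:
    """Return the names of fields that are missing or break their rule."""
    return [
        field
        for field, rule in RULES.items()
        if field not in record or not rule(record[field])
    ]


print(validate({"age": 430, "heart_rate": 72, "sex": "F"}))
```

Returning the list of failing fields, rather than a single pass/fail flag, makes it easier to see which discrepancies need to be rectified.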

Uniqueness

Uniqueness involves avoiding duplications within the dataset. Duplicate entries can skew model training and evaluation, leading to overemphasis on specific patterns. Deduplication processes are essential to maintaining the integrity and accuracy of the data.
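A basic deduplication pass can be sketched as follows. It assumes that two records are duplicates when their key fields match after trimming whitespace and ignoring case; the `deduplicate` helper and the sample customer records are hypothetical, and real datasets may need fuzzier matching.

```python
from typing import Any


def deduplicate(
    records: list[dict[str, Any]], key_fields: list[str]
) -> list[dict[str, Any]]:
    """Keep the first record seen for each normalized key."""
    seen = set()
    unique = []
    for record in records:
        # Normalize so trivial differences do not hide duplicates.
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique


# Hypothetical sample data for illustration only.
customers = [
    {"name": "Ada Lovelace", "email": "ada@example.com"},
    {"name": " ada lovelace ", "email": "ADA@example.com"},
    {"name": "Alan Turing", "email": "alan@example.com"},
]
unique_customers = deduplicate(customers, ["name", "email"])
print(len(customers), "->", len(unique_customers))
```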

Challenges in ensuring data quality

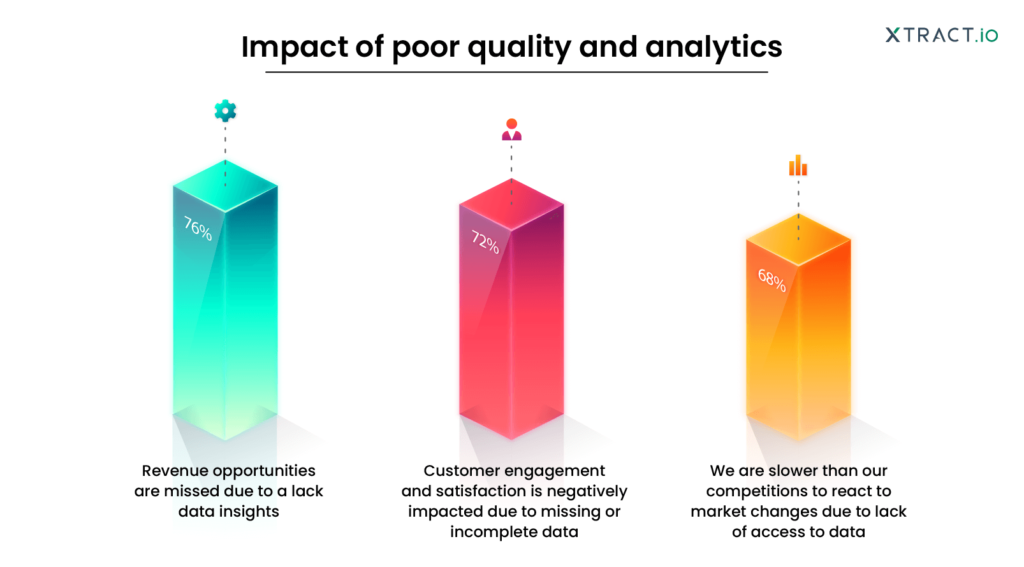

Ensuring data quality in artificial intelligence (AI) poses multifaceted challenges, from managing the sheer volume and complexity of data to addressing issues of accuracy, integration, and security. According to a recent study, over 80% of organizations cite poor data quality as the primary reason for their AI and machine learning (ML) project failures. The magnitude of data-related challenges is exemplified by instances where inaccurate or incomplete data has severe consequences, such as in predictive analytics within healthcare. For example, a recent study found that incomplete or erroneous patient data for training medical AI models could lead to potentially harmful misdiagnoses and treatment plans.

Beyond accuracy, challenges like data bias and fairness present ethical concerns. Biased training data can perpetuate discriminatory outcomes in AI applications, impacting various sectors. These challenges underscore the need for a comprehensive approach to data quality, encompassing technological solutions, robust governance frameworks, and cultivating a skilled workforce with expertise in data management and AI.

Addressing these challenges is crucial for the success of AI initiatives and the overall trust and acceptance of AI technologies. Organizations must invest in robust solutions to continuously enhance data quality, recognizing that AI models’ accuracy and reliability are intrinsically tied to the integrity of the underlying data. As the AI landscape continues to evolve, the ability to navigate and overcome these challenges will be a determining factor in realizing the full potential of AI for informed decision-making and innovation across industries.

Best data quality practices

Achieving success in artificial intelligence projects hinges on the establishment of a robust strategy for data quality. Here’s a comprehensive plan to ensure precision and, consequently, the success of AI projects:

Define clear objectives

Clearly define the objectives of your AI project and the metrics that will measure its success. Knowing the specific goals helps identify the critical data attributes and quality standards necessary to achieve accurate and meaningful outcomes.

Comprehensive data profiling

Conduct thorough profiling to understand your data’s characteristics, patterns, and quality. This involves assessing completeness, accuracy, consistency, and other relevant metrics. Robust data quality solutions and algorithms can assist in identifying anomalies and outliers that may affect data quality.
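To give a feel for what profiling a single numeric column might involve, the sketch below counts missing values, summarizes the range and mean, and flags outliers with the common 1.5 × IQR rule. The `profile_numeric` helper, the sample readings, and the choice of the IQR rule are assumptions for illustration; dedicated profiling tools offer far more.

```python
import statistics
from typing import Optional


def profile_numeric(values: list[Optional[float]]) -> dict:
    """Summarize a numeric column and flag outliers with the 1.5 * IQR rule."""
    present = [v for v in values if v is not None]
    profile = {
        "count": len(values),
        "missing": len(values) - len(present),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
        "mean": statistics.fmean(present) if present else None,
        "outliers": [],
    }
    if len(present) >= 2:  # quantiles need at least two data points
        q1, _, q3 = statistics.quantiles(present, n=4)
        spread = 1.5 * (q3 - q1)
        profile["outliers"] = [
            v for v in present if v < q1 - spread or v > q3 + spread
        ]
    return profile


# Hypothetical sensor readings with one gap and one suspicious spike.
readings = [10, 12, 11, None, 13, 12, 11, 95]
profile = profile_numeric(readings)
print(profile)
```

An outlier flagged this way is not necessarily an error; it is a prompt to check whether the value reflects reality or a data-entry problem.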

Robust data quality solutions

Manually verifying the quality of large datasets is time-consuming and error-prone. Adopting automated data quality solutions is therefore essential to the success of your AI projects.
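Automation can start small. The sketch below shows one possible shape for an automated quality gate that runs before training: each check returns a score between 0 and 1, and the gate fails if any score falls below its threshold. The names `run_quality_gate` and `non_null_ratio`, the sample batch, and the thresholds are all hypothetical and do not describe any specific product.

```python
from typing import Callable

Records = list[dict]
Check = Callable[[Records], float]  # returns a score between 0 and 1


def non_null_ratio(field: str) -> Check:
    """Build a check that scores the share of records where field is set."""
    def check(records: Records) -> float:
        if not records:
            return 0.0
        return sum(r.get(field) is not None for r in records) / len(records)
    return check


def run_quality_gate(
    records: Records, checks: dict[str, tuple[Check, float]]
) -> dict[str, float]:
    """Run every check; raise ValueError if any score is below its minimum."""
    scores = {name: check(records) for name, (check, _) in checks.items()}
    failures = [
        f"{name}: {scores[name]:.2f} < {minimum:.2f}"
        for name, (_, minimum) in checks.items()
        if scores[name] < minimum
    ]
    if failures:
        raise ValueError("Data quality gate failed: " + "; ".join(failures))
    return scores


# Hypothetical training batch: one record has no label.
batch = [
    {"text": "a", "label": 1},
    {"text": "b", "label": 0},
    {"text": "c", "label": None},
    {"text": "d", "label": 1},
]
scores = run_quality_gate(batch, {"label_present": (non_null_ratio("label"), 0.5)})
print(scores)
```

Raising the threshold to 0.9 would make the same batch fail the gate, stopping the pipeline before low-quality data reaches the model.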

Periodic updates

Regularly update your data quality strategy to align with changing business needs, technologies, and industry standards. This flexibility keeps your data quality practices relevant as requirements evolve.

Final thoughts

As organizations navigate the evolving landscape of artificial intelligence, they must recognize that the success or failure of AI initiatives hinges on the quality of the data that fuels these systems. From mitigating biases to ensuring ethical deployment, data quality has become a strategic imperative. The careful consideration of data accuracy, completeness, consistency, relevance, validity, and uniqueness, coupled with the proactive addressing of challenges, sets the stage for a future where AI can be a trusted and transformative force across industries.

Embracing the best data quality solution is not merely a step but the path toward realizing the full scope of AI’s capabilities in shaping a more informed and innovative future. At Xtract.io, we offer the best data quality solution, customizable to your changing AI requirements. Connect with us today and achieve high-quality data at an affordable cost.