Eating a bowl of noodles has never been easy for me. I don’t blame the chopsticks (I have yet to learn how to use ‘em) but rather my aversion to the cabbage in the noodles. Sorting through those yummy strands, I neatly pick out the shreds of cabbage before gobbling up the entire lot.

How did I differentiate a strip of cabbage from a thread of noodles? I would never have given it a thought if not for the growing importance of imitating human neurons in technology.

In an attempt to replicate much-marveled human intelligence, enormous effort has gone into turning machines into rational, human-like beings. This isn’t just another fad: the idea goes back to Alan Turing, who believed machines could be trained to learn from past experience.

Soon machines were playing checkers and chess and beating human champions. But as fun as games were, the bigger lesson was how useful artificial systems would become if only they could “learn” like we do and apply that acquired intelligence to real-life scenarios: think self-driving cars.

The Evolution of Learning

Programmed routines achieved a degree of automation, yet the logic and code still had to be supplied by humans. The concept of Artificial Intelligence, though initially linked with magical robots, soon narrowed down to prediction and classification.

Decision trees, clustering, and Bayesian networks were identified as ways to predict users’ music preferences and classify notorious junk mail. While such traditional ML approaches offer simple solutions to many classification problems, a better way was sought to identify speech, images, audio, video, and text seamlessly, just as a human would. This gave birth to various deep learning methods that rely mainly on the best learning mechanism ever, the human neuron, and that have recently made tech giants like Facebook, Amazon, and Google go gaga over deep learning.
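To make the “traditional ML” side concrete, here is a minimal sketch of a junk-mail filter using naive Bayes, the simplest Bayesian-network classifier. The toy messages and the helper names `train_naive_bayes` and `predict` are hypothetical, made up for illustration; a real filter would need far more data and proper tokenization.

```python
import math
from collections import Counter

def train_naive_bayes(messages, labels):
    """Count word frequencies per class (a multinomial naive Bayes model)."""
    word_counts = {label: Counter() for label in set(labels)}
    class_counts = Counter(labels)
    for text, label in zip(messages, labels):
        word_counts[label].update(text.lower().split())
    vocab = set()
    for counts in word_counts.values():
        vocab.update(counts)
    return word_counts, class_counts, vocab

def predict(model, text):
    """Return the class with the highest log-posterior score."""
    word_counts, class_counts, vocab = model
    total_messages = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, counts in word_counts.items():
        # log P(class): the prior from how often each class appears
        score = math.log(class_counts[label] / total_messages)
        total_words = sum(counts.values())
        for word in text.lower().split():
            # log P(word | class) with add-one (Laplace) smoothing,
            # so an unseen word does not zero out the whole product
            score += math.log((counts[word] + 1) / (total_words + len(vocab)))
        if score > best_score:
            best_label, best_score = label, score
    return best_label

# Hypothetical toy training data
messages = [
    "win a free prize now",
    "claim your free money",
    "meeting moved to monday",
    "lunch with the team tomorrow",
]
labels = ["spam", "spam", "ham", "ham"]

model = train_naive_bayes(messages, labels)
print(predict(model, "free prize money"))       # spam
print(predict(model, "team meeting tomorrow"))  # ham
```

Note that a programmer still decides what the features are (here, individual lowercase words); the model only learns how much each one counts.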

Though interest has surged only recently, Frank Rosenblatt designed the first perceptron, which simulated the activity of a single neuron, way back in 1957. How could I not bring in the example of how the brain works here!

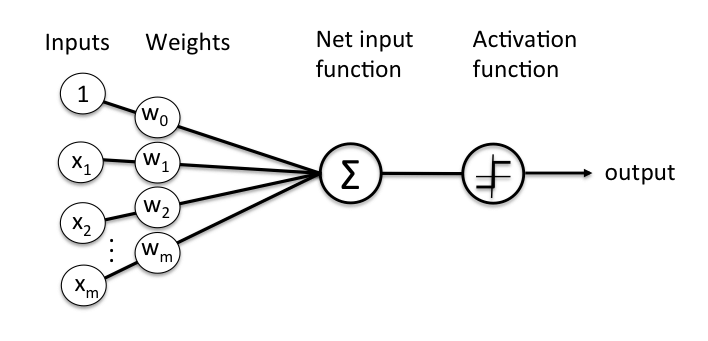

While decoding how the human brain works is itself quite elusive, what we do know is that the brain amazingly identifies objects and sounds by propagating electrical signals through layers of dendrites, firing a positive signal when a threshold value is crossed. The perceptron, shown below, was devised with this in mind.

Image Source: Medium

The output is fired when the weighted sum of the inputs crosses a threshold value. What we have here, though, is just a single-layer perceptron, which works only on linearly separable functions: problems where you can draw a single line and say all the white sheep are on one side and the black sheep on the other. The real world is rarely that simple.
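The threshold rule above fits in a few lines of Python. This is a minimal sketch, assuming a step activation, zero initial weights, and the classic perceptron update rule; the AND and XOR truth tables are just convenient toy datasets. AND is linearly separable, so training converges, while XOR is not, which is exactly the single-line limitation described above.

```python
def step(z):
    """Fire (1) when the weighted sum crosses the threshold, else stay silent (0)."""
    return 1 if z > 0 else 0

def predict(weights, bias, x):
    # Weighted sum of the inputs plus a bias; the bias acts as a (negated) threshold
    return step(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train_perceptron(samples, epochs=20, lr=1.0):
    """Perceptron learning rule: shift weights toward the target on every mistake."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in samples:
            error = target - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            bias += lr * error
    return weights, bias

def accuracy(weights, bias, samples):
    return sum(predict(weights, bias, x) == t for x, t in samples) / len(samples)

AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w, b = train_perceptron(AND)
print("AND accuracy:", accuracy(w, b, AND))  # linearly separable: reaches 1.0
w, b = train_perceptron(XOR)
print("XOR accuracy:", accuracy(w, b, XOR))  # no single line separates XOR: stays below 1.0
```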

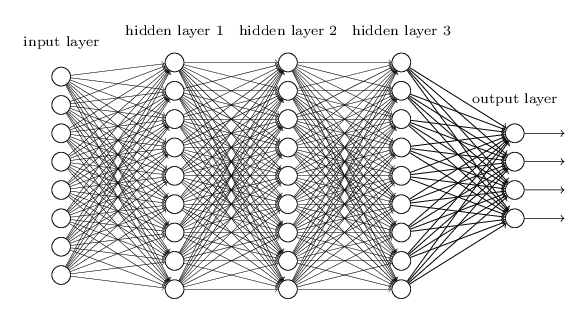

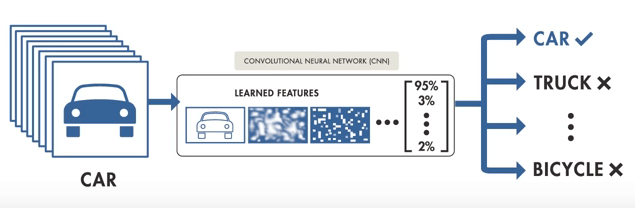

Image recognition, one of the main application areas for neural networks, deals with recognizing many features hidden behind pixels of data. To decipher these features, a multi-layer perceptron approach is taken. As in a perceptron, there is an input layer, where the training data is fed in, and an output layer, where the result appears, but now with a number of “hidden layers” between them.

Image Source: http://neuralnetworksanddeeplearning.com/chap6.html
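To see why hidden layers help, here is a hypothetical two-layer network whose weights were picked by hand rather than learned. It is only a sketch: each hidden neuron detects one simple feature of the inputs (OR and NAND), and the output neuron combines them, which is enough to solve XOR, a problem no single-layer perceptron can handle.

```python
def step(z):
    return 1 if z > 0 else 0

def layer(inputs, weights, biases):
    """One fully connected layer: each neuron thresholds its own weighted sum."""
    return [step(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]

# Hand-picked (hypothetical) weights: hidden neuron 1 behaves like OR,
# hidden neuron 2 behaves like NAND
HIDDEN_W = [[1, 1], [-1, -1]]
HIDDEN_B = [-0.5, 1.5]
# The output neuron ANDs the two hidden features together
OUTPUT_W = [[1, 1]]
OUTPUT_B = [-1.5]

def mlp(x):
    hidden = layer(x, HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]

for x in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(x, "->", mlp(x))  # prints 0, 1, 1, 0 in turn: XOR
```

In a real network nobody picks these weights by hand; finding them is the job of the learning procedure.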

The number of hidden layers determines how deep the learning is, and finding the right number of layers is largely a matter of trial and error. The “learning” in these neural networks is the way the layers adjust the weights initially assigned to them.

While there are various ways for these networks to learn, backpropagation is a common approach: random weights are assigned first, the network’s output is compared with the expected output from the labeled training data, and the error between the two (i.e. actual output vs. expected output) is calculated. This error is then propagated backward, from the layer closest to the output through each inner layer in turn, so that every layer’s weights are adjusted according to their share of the error. The cycle repeats until the error rate is reduced.
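The backpropagation loop can be sketched as a tiny network trained with plain gradient descent. This is an illustrative sketch, assuming a 2-3-1 network with sigmoid activations, mean squared error as the error measure, full-batch updates, and XOR as toy training data; the layer sizes, learning rate, and epoch count are arbitrary choices, not values from any particular system. The error signals are computed from the output layer backward, and the weight updates are then applied together once all gradients are known.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_params(n_in, n_hidden, seed=0):
    """Start from random weights, as backpropagation does."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }

def forward(p, x):
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(p["W1"], p["b1"])]
    y = sigmoid(sum(w * hi for w, hi in zip(p["W2"], h)) + p["b2"])
    return h, y

def loss(p, data):
    """Mean squared error between actual and expected outputs."""
    return sum((forward(p, x)[1] - t) ** 2 for x, t in data) / len(data)

def train_step(p, data, lr):
    """One full-batch gradient-descent step using backpropagated error signals."""
    n_hidden = len(p["W2"])
    gW1 = [[0.0] * len(row) for row in p["W1"]]
    gb1, gW2, gb2 = [0.0] * n_hidden, [0.0] * n_hidden, 0.0
    for x, t in data:
        h, y = forward(p, x)
        # Output-layer error signal: d(loss)/d(pre-activation) for sigmoid + MSE
        delta_out = 2 * (y - t) * y * (1 - y) / len(data)
        gb2 += delta_out
        for j in range(n_hidden):
            gW2[j] += delta_out * h[j]
            # Push the error one layer back through W2 and the hidden sigmoid
            delta_h = delta_out * p["W2"][j] * h[j] * (1 - h[j])
            gb1[j] += delta_h
            for i, xi in enumerate(x):
                gW1[j][i] += delta_h * xi
    # Move every weight a small step against its gradient
    for j in range(n_hidden):
        p["W2"][j] -= lr * gW2[j]
        p["b1"][j] -= lr * gb1[j]
        for i in range(len(p["W1"][j])):
            p["W1"][j][i] -= lr * gW1[j][i]
    p["b2"] -= lr * gb2

XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
params = init_params(n_in=2, n_hidden=3)
print("loss before:", round(loss(params, XOR), 4))
for epoch in range(5000):
    train_step(params, XOR, lr=0.5)
print("loss after: ", round(loss(params, XOR), 4))
```

A network this small can occasionally settle in a poor local minimum depending on its random starting weights, which is one reason architectures and settings are tuned by trial and error.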

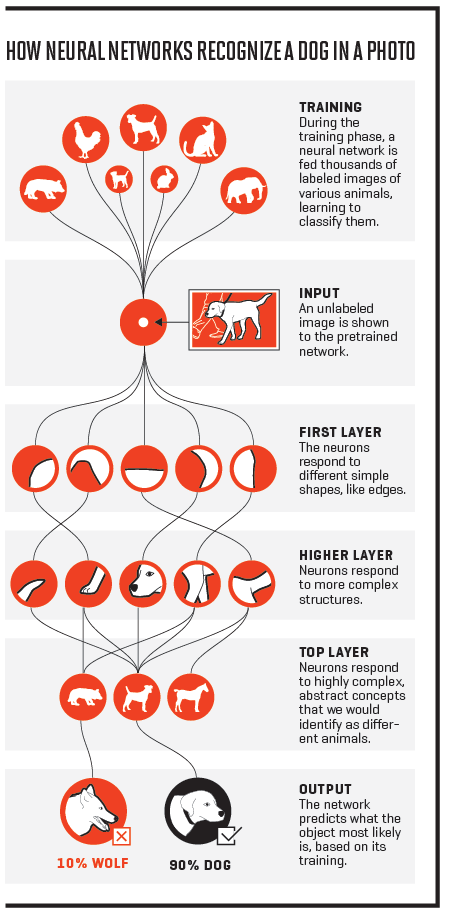

The image below offers a high-level yet practical view of the hidden layers in action. I do owe an apology to cats and dogs, given the innumerable times they have been pulled into “learning” experiments! Data annotation tools like Mojo help label such datasets, making learning and correlation easier.

The example makes it look simple, with each layer corresponding to a particular feature, but interpreting how the hidden layers actually work is far harder than it seems. In typical deep learning scenarios, the hidden layers are likened to black boxes: they do what they do, but the reasoning behind each layer remains as much a mystery as the brain itself.

Image Source: https://fortune.com/longform/ai-artificial-intelligence-deep-machine-learning/

Anyway, how is deep learning any different from other machine learning approaches?

A one-line answer would be the amount of training data involved and the computational power needed.

Before I elaborate on the differences, one has to understand that deep learning is a means of achieving machine learning, and there exist many such ML methods for reaching the desired level of learning. Deep learning is just one of them, and it is gaining popularity rapidly because of how little manual intervention it needs.

Image Source: Matlab tutorial on introduction to deep learning

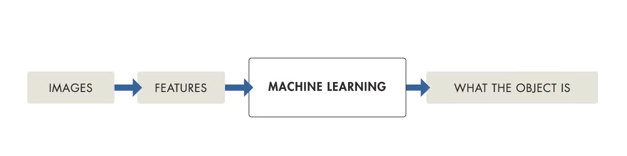

That being said, traditional ML models need a step called feature extraction, where a programmer has to specify explicitly which features to look for in a given training set. If any one of those features is missed, the ML model may fail to recognize the object at hand. Everything hinged on how good the algorithm and its hand-picked features were, i.e. the programmer had to keep every possible case in mind. Otherwise, varied signposts and the myriad objects on the road would be hard for a driverless car to detect, with potentially life-threatening consequences.

Deep learning, on the other hand, is fed large datasets of diverse examples, from which the model learns which features to look for, and it produces its output as a vector of probabilities over the possible classes. The model “learns” for itself, just as we learned numerical digits as kids.

Image Source: Matlab tutorial on introduction to deep learning
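The “probability vectors” a deep learning model outputs typically come from a softmax function applied to its final layer. The snippet below is a minimal sketch, assuming a ten-class digit recognizer; the raw scores are made-up numbers standing in for what a trained network would produce.

```python
import math

def softmax(scores):
    """Turn raw output-layer scores into a probability vector that sums to 1."""
    # Subtracting the max score first avoids overflow in exp() without changing the result
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw scores from a digit classifier's output layer, one per digit 0..9
scores = [0.1, 0.3, 0.2, 4.0, 0.0, 1.2, 0.4, 0.1, 2.5, 0.3]
probs = softmax(scores)
predicted_digit = max(range(len(probs)), key=probs.__getitem__)
print("predicted digit:", predicted_digit)  # 3, the class with the highest score
print("confidence:", round(probs[predicted_digit], 3))
```

Because every class gets a probability, the model also says how sure it is, rather than just naming a single answer.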

Great! So why didn’t we start off with deep learning earlier?

The multilayer perceptron and backpropagation were worked out in theory by the 1980s, yet for lack of huge amounts of data and enough processing power, the excitement died down. Since the advent of big data and Nvidia’s super powerful GPUs, the potential of deep learning has been tried and tested like never before.

There has been a lot of debate over how large datasets for neural networks need to be. Though some claim that smaller yet diverse datasets will do, the more parameters you want the deep learning model to learn, and the more complex the problem at hand, the more training data you need. Otherwise, having many dimensions but little data results in overfitting, which means your model has literally mugged up its answers and works only on the set you trained it on, rendering what the layers learned futile.
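The “mugging up” failure mode is easiest to see with a deliberately extreme toy example. The sketch below, assuming made-up one-dimensional data, compares a hypothetical “memorizer” that stores every training example with a simple learned threshold. Both score perfectly on the training set; only held-out validation data reveals which one actually learned the pattern. It is not a neural network, just an illustration of why models are checked on data they were not trained on.

```python
def memorizer_fit(train):
    """A 'model' that simply memorizes every training example (pure overfitting)."""
    table = dict(train)
    # Unseen inputs fall back to class 0, because nothing general was learned
    return lambda x: table.get(x, 0)

def threshold_fit(train):
    """A one-parameter model: pick the threshold that best separates the training labels."""
    xs = sorted(x for x, _ in train)
    candidates = [(a + b) / 2 for a, b in zip(xs, xs[1:])]
    best = max(candidates, key=lambda t: sum((x > t) == bool(y) for x, y in train))
    return lambda x: int(x > best)

def accuracy(model, data):
    return sum(model(x) == y for x, y in data) / len(data)

# Hypothetical data: the label is 1 whenever x >= 5
train = [(x, int(x >= 5)) for x in range(10)]
# Held-out points that fall between the training points
validation = [(x + 0.5, int(x + 0.5 >= 5)) for x in range(10)]

for name, fit in [("memorizer", memorizer_fit), ("threshold", threshold_fit)]:
    model = fit(train)
    print(f"{name}: train={accuracy(model, train):.2f} validation={accuracy(model, validation):.2f}")
```

This prints a validation accuracy of 0.50 for the memorizer (no better than a coin flip) and 1.00 for the threshold model, even though both are perfect on the training data.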

To illustrate the need for large data, let’s look at three successful cases of large training datasets used for neural networks:

- Facebook’s famous face recognition system, aptly named “DeepFace”, used a training set of over 4 million facial images of more than 4,000 identities and reached an accuracy of 97.35% on the Labeled Faces in the Wild (LFW) benchmark. Its research paper stresses in several places how such a large training set helped overcome the problem of overfitting.

- Alex Krizhevsky, who developed AlexNet and joined Geoffrey Hinton in the Google Brain undertaking, described, together with fellow researchers, a learning model for robotic grasping involving hand-eye coordination. To train their network, a total of 800,000 grasp attempts were collected, and the robotic arms successfully learned a wider variety of grasp strategies.

- Andrej Karpathy, later Director of AI at Tesla, used large datasets during his Ph.D. days at Stanford to build deep learning models for dense captioning: identifying all the parts of an image, not just the cat! The team used 94,000 images and 4,100,000 region-grounded captions and saw improvements in both speed and accuracy.

Andrej has also said that the motto he believed in was to keep data large, algorithms simple, and labels weak.

So how large is your data?

Supporting the other side of the argument is a recent research paper on deep face representations using small data, in which face recognition models trained on 10,000 images were found to perform on par with those trained on 500,000 images. But the same hasn’t been proven for the other areas where deep learning is currently applied: speech recognition; vehicle, pedestrian, and landmark identification in self-driving vehicles; NLP; and medical imaging. Transfer learning, a newer approach, has also been found to rely on models pre-trained on large volumes of data.

Until that is proven, if you are looking to implement neural networks in your business (the way the sales team at Microsoft uses neural networks to recommend which prospects to contact or which offerings to pitch), you need access to an extensive dataset. As Andrew Ng, former chief scientist at Baidu and a popular deep learning expert, puts it, deep learning models are like rocket engines that need loads of fuel, and that fuel is data.


Now, while I get back to picking the cabbage out of my noodles (thanks to my amazing brain), why don’t you start feeding your artificial neural network accurate, high-quality deep learning datasets?